Lies, Damn Lies, and Conversion Optimization

We all make the same mistake. We fall in love with our business website. Conversion optimization isn’t even on the radar.

We assume that prospective customers who visit our website will find it brilliant, intuitive, and captivating. And we are shocked (shocked!) to find out otherwise.

Yes, you should build your website based on your own inspiration and web design best practices. But recognize that this is just a best guess.

If you want your business website to engage and convert the maximum number of customers, there’s one thing that you MUST do.

YOU MUST TEST

The 5-Step Conversion Optimization Process for Business Websites:

- Bring targeted prospects to your business website

- Run a split test with different page variations

- Gather statistically significant data about your conversion target

- Pick the winner

- Repeat

Conversion optimization (aka “conversion rate optimization”) is the highest-confidence way to test and continually improve your business website.

[A “split test” requires randomly showing different page variations to different sets of visitors. A “conversion target” could be an opt-in, a download, a purchase, etc.]
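One common way to handle the "randomly showing" part is to hash a visitor identifier into a bucket, so that each visitor gets a pseudo-random variant and keeps seeing the same one on return visits. Here is a minimal Python sketch of that idea. The function name `assign_variant`, the experiment label, and the cookie-style visitor IDs are assumptions for illustration, not how any particular testing tool works.

```python
import hashlib


def assign_variant(visitor_id, variants=("A", "B"), experiment="homepage-test"):
    """Map a visitor to one variant, pseudo-randomly but repeatably."""
    # Hash the (hypothetical) experiment name together with the visitor ID,
    # so the same visitor can land in different buckets in different tests.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Turn the hash into an integer and use it to pick a bucket.
    return variants[int(digest, 16) % len(variants)]


if __name__ == "__main__":
    for visitor in ("visitor-1", "visitor-2", "visitor-3"):
        print(visitor, assign_variant(visitor))
```

Because the assignment depends only on the visitor ID and the experiment name, all variants receive traffic during the same time period, and no visitor flips between pages mid-test.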

See below for some very helpful online tools for running conversion rate optimization tests.

But first, here are the 4 biggest conversion optimization mistakes that businesses make. Avoid them at all costs!

Conversion Optimization Mistake #1:

Gathering Opinions

Opinions exist inside your company. Facts, however, exist beyond its walls.

You probably know better than to seek the HIPPO – the Highest-Paid Person’s Opinion. (In case you don’t, this site is for you.) But do you also appropriately discount your customers’ opinions?

Your customers’ opinions about your business website are rarely helpful.

“Do you like this design?” “Sure.”

“Would you click this link?” “Probably.”

“Does this page make sense to you?” “Oh, of course.”

Rubbish. You can’t determine how customers will behave by asking them. There are too many biases, too much desire to please, and too much self-interest in sounding smart.

Most importantly, we all have a very poor conscious understanding of the subconscious elements that actually drive our decisions. We can’t consciously explain why we chose the chocolate sprinkles over the rainbow sprinkles, and we can’t consciously explain why we chose to click the button on your web page.

We make such decisions instinctively, and then try (and fail) to justify them logically after the fact.

The only way to determine how your customers will behave in a given situation is to put them in that situation and observe them.

Conversion rate optimization is about running real-life experiments, not collecting opinions.

An Important Alternative

That being said, there are some effective ways of putting customers “in that situation” other than with conversion rate optimization.

Live user testing, when well-orchestrated, can yield amazing insights. For this I always use www.UserTesting.com. Try it out.

The best way is to run one test at a time, learn from it (you’ll learn a ton), and then run another.

Conversion Optimization Mistake #2:

Testing Too Small

You should test orange and green button colors, or “Buy Now” vs. “Add To Cart”, or “Fastest Widget” vs. “Highest Performing Widget” and see which convert better, right?

That’s perfectly fine… *if* (and this is a big “if”) you’ve already tested all bigger changes.

Conversion optimization is like climbing a mountain. Testing small changes could move you either up or down the mountain, and eventually you may reach the top.

But testing big changes can move you to entirely different mountains, some of which will lead to much greater heights.

Get on the best mountain first, then climb your way to the top.

Test radical web page differences first, then refine.

Conversion Optimization Mistake #3:

Testing Sequentially

So you’ve designed a radical new web page. You then replace your current homepage with it and run a test. You find a 50% increase in conversions. Awesome. You have a winning design, right?

Maybe, but you really don’t know! When you run tests in sequence, you invite all sorts of trouble:

- You may have hit a lucky time period. Vacations, school schedules, seasons, moon phases, and dozens of other factors that influence visitor behavior are cyclical in nature.

- Other things on your site might also have changed. What if those changes accounted for the effect?

- Your traffic mix might have changed. Have your Google organic search placements increased or decreased? Have you tweaked your AdWords account, or have your AdWords Quality Scores changed? Did you run a Facebook promotion?

And the list goes on.

The point is simple. Unless you test your pages simultaneously, driving a portion of customer traffic to all variants at once, you can’t trust your conversion rate optimization data.

Conversion Optimization Mistake #4:

Ending the Test Too Soon

So you run a simultaneous split test and see after a week that variant B converts 30% better than variant A. Awesome! Pick Variant B and move on, right?

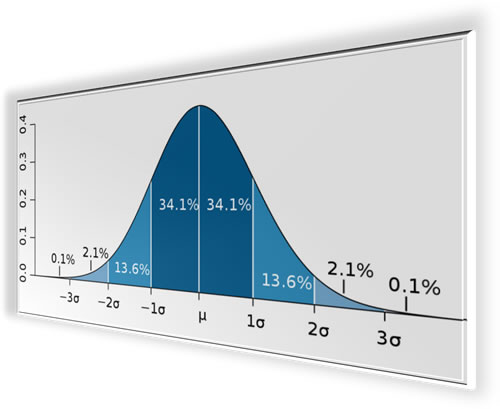

Keep your pants on. Seeing a higher conversion rate only matters if you’ve captured enough data to reach statistically high confidence.

For much real-world testing, a 95% confidence level with p-value below 0.05 is considered good enough. This extremely helpful Split Test Calculator page explains what the heck I just said.

Roughly speaking, this confidence level means that if the two variants truly performed the same, we’d see a difference this large by chance only about 1 time in 20. That’s good enough confidence for most business website testing.

Whatever confidence level you decide is right for your business, stick with it. Don’t end tests early just because you like the result so far.

Wait for statistically significant data.
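If you'd like to see roughly what a significance check does under the hood, here is a minimal Python sketch of a two-proportion z-test. This is one standard textbook approach and not necessarily the method used by the calculator mentioned above. The function name and the visitor counts in the example are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the best estimate if both variants really convert the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical data: 1,000 visitors per variant.
    z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=130, n_b=1000)
    print(f"z = {z:.2f}, p-value = {p:.3f}")
    print("significant at 95%" if p < 0.05 else "keep the test running")
```

Decide on the threshold (0.05 here) before the test starts. Checking the p-value every day and stopping at the first dip below the threshold is just another way of ending the test too soon.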

Why You Must Understand Statistics

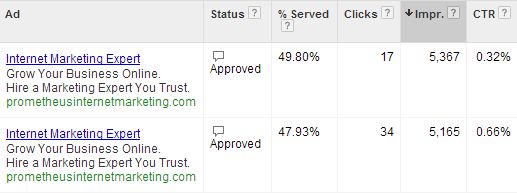

Here is a sample split test that I ran with two different Adwords ads:

The data looks compelling: twice the CTR (click-through rate) for a large data set.

Plug this data into the Split Test Calculator, and you’ll find that the second ad leads to an expected 108% improvement (ranging from 19% to 176%) with a 95% confidence level and a p-value of 0.012.

Case closed! We have a winner.

But wait a minute. Did you notice anything funny about the ads?

Look again. The ads are identical!

In fact, the difference I was testing in this experiment was the destination URL, which differed between the ads. But you can’t see that from the ads as displayed by Google. Visitors effectively clicked on the identical ad at statistically significantly different rates.

What gives?

Well, remember that this is all about probability, not certainty. With a p-value of 0.012, a gap this large would show up by chance alone in roughly 1 of every 83 trials of two identical ads, and I happened to record one of those runs. For the prior time period, the ad CTRs were nearly identical (0.48% and 0.51%), as would be expected.

So even with high-confidence statistics, we’ll sometimes reach a wrong conclusion. Don’t lose sleep over it. Trust that this process will work more often than it fails. And if you really don’t trust (or don’t like) an outcome, run the experiment longer to gain more confidence.
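To get a feel for how often chance alone produces a "winner," you can simulate many tests in which both variants are truly identical and count how many come out significant. The Python sketch below does that. The 5% conversion rate, visitor counts, and trial count are made-up numbers, and the significance check is a standard two-proportion z-test rather than any specific calculator's method.

```python
import math
import random


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no conversions (or all conversions): nothing to compare
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))


def false_winner_rate(true_rate=0.05, visitors=1000, trials=500,
                      alpha=0.05, seed=1):
    """Fraction of identical-variant tests that still look significant."""
    rng = random.Random(seed)
    false_winners = 0
    for _ in range(trials):
        # Both "variants" share the same true conversion rate.
        conv_a = sum(rng.random() < true_rate for _ in range(visitors))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors))
        if p_value(conv_a, visitors, conv_b, visitors) < alpha:
            false_winners += 1
    return false_winners / trials


if __name__ == "__main__":
    print(f"False winners: {false_winner_rate():.1%} of identical-page tests")
```

With a 0.05 threshold, you should expect the printed share to land somewhere in the neighborhood of 5%. That is the price of testing with probabilities, and it is why a surprising result deserves a longer run.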

SUMMARY: The Right Way to Perform Conversion Optimization on Your Business Website

- Don’t listen to opinions

- Test big changes first

- Perform simultaneous split testing

- Wait for high statistical confidence

BONUS: The Right Tools for the Job

Run simultaneous conversion optimization split tests:

- Google Analytics Content Experiments — My #1 choice for most businesses for its simplicity and full integration with Google Analytics

- Optimizely or Visual Website Optimizer — If you require more advanced testing options, such as multivariate testing

Analyze your statistics more fully:

- ABBA Split Test Calculator — Determine the significance of your test data

- A/B Test Significance — Determine how many data points you’ll need to reach significance
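For a rough idea of what "how many data points" means in practice, here is a Python sketch of a common textbook sample-size approximation for comparing two conversion rates. It is not necessarily what the tools above compute. The function name, the 80% power default, and the example rates are assumptions for illustration.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect a given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    # z-scores for the two-sided significance level and the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical: 5% baseline conversion, hoping to detect a 20% relative lift.
    print(visitors_per_variant(baseline_rate=0.05, relative_lift=0.20))
```

Small improvements need far more traffic to confirm than large ones, which is one more reason to test big changes first.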

Other recommendations? Disagreements? Please comment below!

I haven’t started testing yet. Not with my latest site yet, anyway, which is my company blog. Technically, it isn’t even ready to be seen by people. But, I’m promoting it anyway!

Obviously I look at site stats and Google stats. But, they don’t really tell me much. I do agree, people’s opinions aren’t really worth that much. I will check out some of your other resources.

Thanks,

Josh

Hi Josh — Thanks for your comment.

One thing I glossed over in this post is the need to clearly define your conversion goal. Do you want people to contact you? To buy something? To read 3 or more blog posts? To “Like” your page?

Once you define the specific conversion goal that you are optimizing for, then the proper stats to analyze it will usually become clear.